We Are Not an AIOps Company

In our young history as a company, we have had the good fortune of speaking with well over 100 of the world’s largest enterprises as potential customers, and one of the more interesting dialogues we get into with them is on the subject of AIOps. This usually comes up when a prospective customer has been using an AIOps tool like BigPanda or Moogsoft, and it becomes immediately clear that the customer’s definition of “AIOps” is a Venn diagram that overlaps perfectly with the core competencies and feature sets of the existing AIOps tools. So what are the key features that customers equate with the term “AIOps,” and why should we care?

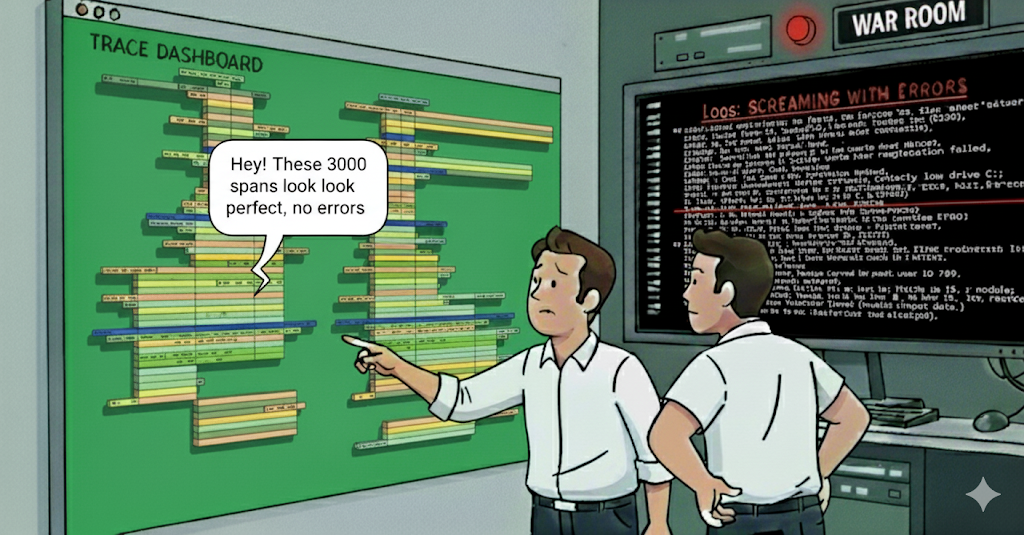

We’ll get to the “why should we care” question a bit later, but first, when we tried to identify the core features that customers rely on existing AIOps tools like BigPanda and Moogsoft for, we largely concluded that the core function they were serving was alert denoising. Alert denoising refers to the combined ability to deduplicate alerts and cluster those that may be related. In these types of platforms, alerts are typically combined into a “situation,” an “incident,” or an “event.” Ask any developer or SRE who deals with IT operations, and you’ll soon understand that alert fatigue is a thing. Most enterprise technical organizations have set up robust alerting, almost to a fault. In other words, the longstanding best practice of implementing alerting when critical infrastructure, services, workflows and/or components cross some quantitative threshold is pervasive, prolific, and oftentimes hypersensitive. What this means in practice is lots of alerts. Which ones to respond to? Which ones to ignore? Which ones are false positives, and which ones are coupled with some known trend or seasonality? Which ones are a symptom and which ones may be causal? What was established as a well-intentioned mechanism to help developers get ahead of potentially catastrophic issues has, in practice, become a “boy who cried wolf” phenomenon - many teams have basically developed their own unwritten mental heuristics for determining what needs action and what can be ignored.
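To make “deduplicate and cluster” concrete, here is a minimal sketch in Python. It is an illustration only, not how BigPanda, Moogsoft, or Flip AI actually work: the `Alert` fields, the `(service, check)` fingerprint, and the 60-second `max_gap` are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Alert:
    service: str      # hypothetical field: the service that emitted the alert
    check: str        # hypothetical field: the threshold or rule that fired
    timestamp: float  # seconds since an arbitrary epoch


def deduplicate(alerts: List[Alert]) -> List[Tuple[Alert, int]]:
    """Collapse alerts sharing (service, check); keep the earliest plus a count."""
    first_seen: Dict[Tuple[str, str], Alert] = {}
    counts: Dict[Tuple[str, str], int] = {}
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.service, alert.check)  # assumed fingerprint
        first_seen.setdefault(key, alert)
        counts[key] = counts.get(key, 0) + 1
    return [(first_seen[key], counts[key]) for key in first_seen]


def cluster(alerts: List[Alert], max_gap: float = 60.0) -> List[List[Alert]]:
    """Group alerts into 'situations' by time proximity.

    A new group starts whenever the gap to the previous alert exceeds max_gap.
    """
    groups: List[List[Alert]] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if groups and alert.timestamp - groups[-1][-1].timestamp <= max_gap:
            groups[-1].append(alert)
        else:
            groups.append([alert])
    return groups
```

Calling `cluster([a for a, _ in deduplicate(raw_alerts)])` on a list of raw alerts yields a handful of candidate “situations” instead of a flat stream. Even this toy version shows the limitation discussed in this post: grouping by fingerprint and time reduces the noise, but it says nothing about which alert is a symptom and which is the cause.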

Knowing this, there is clearly value in denoising and enriching alerts with more actionable information; in theory, this should lead to faster identification of real issues and faster remediation. But here is where the conversation becomes more multifaceted:

- What really is the “AI” in AIOps?

- How can and will GenAI play a role?

- Finally, is AIOps an outdated moniker? Why or why not?

What really is the “AI” in AIOps?

Flip AI calls itself a GenAI-native observability platform. What do we mean when we say this? Primarily, we mean that we deliver the “understanding” layer, if you will, on top of the raw observability data. Our entire offering is built on the foundations of large language models that we have developed and refined in-house. They are the engine that powers the output our customers see - well-articulated, reasonable root-cause analyses for their production incidents. We have gone to great lengths to articulate how we’ve done this in our white paper here. How does this differ from the older, incumbent AIOps tools like BigPanda and Moogsoft? The simplest answer is that Flip AI can work well right out of the box. From what our prospective customers have told us, the incumbent AIOps tools impose steep requirements on their customers because of the older machine learning methodologies they employ. This takes the form of asking customers to conform their data to rigid data taxonomies, and perhaps requiring them to label or annotate data, all of which extends the time to value for AIOps platforms. And of course, alert configurations are not static - new ones are being put in place, old ones are being deprecated, existing ones are being modified - which means a near-constant investment in data cleanliness and taxonomical rigidity in order to extract value.

On the other hand, Flip AI does not require any investment from the customer when it comes to data “readiness” - no annotating, no labeling, no conforming to fixed data schemas. Flip AI interrogates all of the underlying observability data within the incident window much like a seasoned developer would. From there, our LLMs logically construct a story by connecting the dots between all of the underlying observability data necessary to support a probable root cause. Flip AI takes on the most time-consuming and humanly challenging part of the debugging process - the finding, sifting, interpreting, correlating and making sense of all the telemetry connected to an incident. We don’t simply stop at intelligent alert grouping.

While much of this was likely just a timing issue - AIOps platforms used the machine learning technologies and techniques available to them at the time - this was not the promise of AI as our customers understood it. We believe that the AIOps players did their best with the technology available to them, but they tackled a narrower problem, and the broader promise failed to materialize in a big way. Time and again, we see leaps in technology help solve a much larger problem that is more aligned with the customer's intent. The question now is, with the advent of large language models, will customers reorient their understanding of what AIOps means?

The role of GenAI

With the advent of large language models and smaller domain-specific models, machine learning has achieved a step-function improvement in its ability to contextualize unstructured and semi-structured data. From an ITOps perspective, what this means in practice is that applying machine learning to different problem sets, such as alert denoising, incident management, and root cause analysis, should not impose any data cleansing or MLOps investments on customers. For observability and AIOps technology providers that take the right approach to implementing LLMs, time to value for customers can be drastically reduced. And let us note, taking the “...right approach to implementing LLMs” is not for the faint of heart. Contrary to what “AI influencers” on LinkedIn may be spouting about how easy it is to simply fine-tune general purpose models (a laughable assertion), this requires deep ML expertise, and for observability incumbents, it requires the courage to disrupt yourself. This is not the same thing as a slapdash chat or text summarization implementation of an off-the-shelf general purpose model, which is what we mostly see across observability providers today. Technology providers should think of LLMs not as a new tool in their existing toolbox, but almost as an entirely new chassis upon which new ITOps experiences can and should be imagined.

Because we apply GenAI to some of ITOps’ most vexing pain points, we understand the reflex of prospective customers to lump us into the same bucket as the incumbent AIOps players. Perhaps long ago, our predecessors made some of the same assertions we confidently make today, and our prospective customers are naturally skeptical of hearing them again. But it is incumbent upon the new generation of GenAI-native platforms, like Flip AI, to educate our customers with empathy and patience, and to help them understand how underlying shifts in machine learning technology have drastically improved the ability to meet the original promise of AIOps.

So, is AIOps an outdated moniker?

I suppose it depends on who you ask. To a community of large global enterprises, who have been exposed to some of the traditional AIOps platforms, and who now see what companies like Flip AI are capable of, it may be like comparing apples to oranges. Consolidating and clustering potentially related alerts were the bread and butter of the incumbent AIOps platforms. This required a hefty investment of time and data conformity from customers, which increased the time to value. In all of our conversations with large enterprises, this is largely how they view AIOps. For better or worse, the moniker now carries with it a certain narrative and weight.

Is leveraging the power of LLMs to improve ITOps across a number of facets - intelligent alert clustering, cross-system and cross-modality data correlation, root cause analysis, incident forecasting and prediction, and even self-healing - the new AIOps? Or do we need to develop new language, new shorthand jargon and nomenclature to help our customers better differentiate? We leave this decision to market forces, to crafty marketers and public relations folks, and to the analysts who think deeply about whether new technologies fit existing categories or whether new categories are being born before our eyes. And we leave it to you, the reader, to make up your own mind about this exciting new frontier of GenAI and ITOps.